Bringing augmented reality into clinical practice

February 05, 2019

by John W. Mitchell, Senior Correspondent

In popular culture, Pokémon Go is probably the best-known example of augmented reality (AR), a relatively new technology that overlays virtual components onto real physical environments. But using your iPhone to capture cartoon characters around the neighborhood is just one example of what AR can do, and medical imaging is full of promising applications.

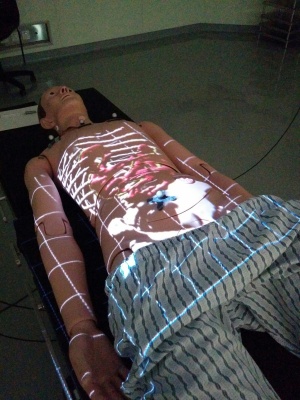

HealthCare Business News spoke to Ian Watts, a computing science graduate student at the University of Alberta, to find out about ProjectDR, an AR application he developed that displays CT and MR scans directly on a patient's body and keeps them aligned as the patient moves.

HCB News: As you developed this technology, what was the need that you were trying to meet?

Ian Watts: AR is a relatively new field with a lot of excitement around it, so we are exploring whether AR systems are viable for medical applications. They can provide more intuitive ways to interact with medical data in real time, enhance perception and potentially lead to better patient outcomes.

The specific need we are trying to meet with ProjectDR is to make it easier to locate and work with anatomy under the skin, by giving the clinician more information and improving the patient's experience. Viewing medical images on a monitor provides useful information, but it can still be challenging to apply that information while working with a patient.

Our goal was to develop a tool for clinicians performing manipulations on a patient's spine. The clinician must find the correct vertebra to work with by palpating or by using visual indicators on the patient's skin. This is a challenging task that leaves the clinician prone to errors. With ProjectDR, the medical images can be overlaid and mapped onto the patient to provide clear visuals of their spine and greater spatial awareness of the rest of their anatomy.

HCB News: How did the actual development process go? Were there any particularly challenging hurdles?

IW: The development process for ProjectDR was iterative and progressed through several stages, testing different combinations of hardware. The first concept was created as a class project in the computing science department using a handheld laser projector, motion capture cameras and tracking markers. It could only display basic 3D models, but it worked well enough to give us a solid prototype to improve upon and modify.

The next version had substantially improved graphical features, such as volume rendering for displaying CT and MR images. We replaced the handheld projector with a larger and brighter LED projector and built a sturdy frame to suspend and move all of the components over a table workspace. The current iteration features a user-friendly interface and many quality-of-life improvements. It can also interface with more types of hardware, including motion and eye trackers and depth-sensing devices such as the Microsoft Kinect and Magic Leap.

One of the specific issues we encountered was finding a suitable projector. We initially used a laser projector because it stays in focus at all depths at the same time, something a regular projector cannot do. This proved impractical: laser projectors were not widely available at the size and cost we wanted, and the handheld model was not bright enough to work in a lit room. Switching to an LED projector limited the depth range that can be in focus, but this has not been an issue for the size of spaces we have been using. The increased brightness and reduced cost have been worth the trade-off.

HCB News: What are some of the areas where you're trying to improve or perfect the system?

IW: For a system to be used in medical applications, it must be consistent and accurate. We have been improving the calibration process that aligns the projector and motion capture systems by trying different algorithms and reducing user input error. Another work in progress is improving and automating the registration between the virtual images and their physical targets. Together with improvements to the user interface, this reduces set-up time and makes the system easier to use. There are no firmly established standards for how an AR system should work, since these systems are new and differ from one another, which leaves lots of room for improvement.

Other AR researchers have also made advances that could be incorporated in the future, such as elegant ways to handle the occlusion that occurs when an untracked object moves in front of the projection target.
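The interview does not describe how ProjectDR performs registration. As a rough illustration of what the term means, the sketch below shows one standard textbook technique, point-based rigid registration with the Kabsch (SVD) algorithm: given a few landmarks identified in the CT or MR volume and the same landmarks measured by a motion capture system, it estimates the rotation and translation that map one set onto the other. The function names, landmark coordinates and millimetre units are hypothetical, and a real clinical system would also have to handle measurement noise, soft-tissue deformation and patient movement.

```python
import numpy as np


def rigid_register(image_pts, tracked_pts):
    """Kabsch/SVD rigid registration (illustrative sketch, not ProjectDR code).

    Finds the rotation R and translation t that minimise
    sum_i ||R @ image_pts[i] + t - tracked_pts[i]||^2.
    Both inputs are (N, 3) arrays of corresponding landmarks, N >= 3.
    """
    src = np.asarray(image_pts, dtype=float)
    dst = np.asarray(tracked_pts, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 3 or len(src) < 3:
        raise ValueError("expected two matching (N, 3) arrays with N >= 3")

    # Centre both point sets on their centroids.
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    # 3x3 cross-covariance matrix of the centred landmarks.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1), not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def rms_error(image_pts, tracked_pts, R, t):
    """Root-mean-square distance between mapped image landmarks and tracked landmarks."""
    src = np.asarray(image_pts, dtype=float)
    dst = np.asarray(tracked_pts, dtype=float)
    residuals = src @ R.T + t - dst
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))


if __name__ == "__main__":
    # Hypothetical landmarks (mm) in the CT frame, e.g. points picked on vertebrae.
    image_pts = np.array([[0, 0, 0], [50, 0, 0], [0, 80, 0], [0, 0, 30]], dtype=float)
    # Simulate the tracker seeing them rotated 30 degrees about z and shifted.
    a = np.radians(30)
    R_true = np.array([[np.cos(a), -np.sin(a), 0],
                       [np.sin(a), np.cos(a), 0],
                       [0, 0, 1]])
    t_true = np.array([10.0, -5.0, 2.0])
    tracked_pts = image_pts @ R_true.T + t_true

    R, t = rigid_register(image_pts, tracked_pts)
    print(np.allclose(R, R_true), np.allclose(t, t_true))  # True True
    print(rms_error(image_pts, tracked_pts, R, t) < 1e-9)   # True
```

With noise-free simulated landmarks the transform is recovered exactly; with real tracker data the RMS error would be non-zero, and a residual like this is one simple way a system could flag a registration that needs to be redone.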

HCB News: What type of medical uses does ProjectDR already have? Are there other applications you see down the line?

IW: ProjectDR is a general-purpose tool that can display images on tracked targets, so there are many potential uses. As I mentioned before, we've been working on using the system for spinal adjustments. Clinicians have to use medical images to identify the position at which, and the direction in which, to apply force to the spine. There is a lot of variability between people, so it can be quite challenging to find internal structures, even ones near the surface like vertebrae.

With ProjectDR, the patient lies on a table and the imaging data of their spine is projected onto their back, giving the clinician reference points for locating the desired vertebrae and deciding where to apply force. This can reduce the time spent locating vertebrae, reduce the need for palpation and mitigate errors. A simple extension would be to use the system for training, since it makes learning anatomy more tangible and interactive than a textbook or model.

Another application is preoperative surgical planning. Segmented CT images can be displayed on the patient or on a surgical model and used to plan the procedure with a surgical team. The relevant anatomy can be displayed, moved or hidden as the planning proceeds, and viewed from different perspectives. For example, planning the removal of a lung tumour could involve displaying an image on a surgical model and progressively hiding parts of the ribs and lung, so that the tumour is visible along with the veins and arteries relevant to the surgery. Modified medical images can also be projected onto the patient to preview the changes a procedure would make. This would be useful in plastic and reconstructive surgery, where visual aids are important.

In the future, the technology could be applied to minimally invasive procedures such as laparoscopic surgery. This surgery is performed by inserting cameras and tools into the patient's abdomen and viewing the operation on a monitor away from the patient and the surgical site. Viewing the operation through a camera and monitor removes visual depth cues and limits the field of view.

HCB News: From a big-picture perspective, how disruptive do you think augmented reality technology can be for healthcare, and for imaging in particular?

IW: AR has the potential to be quite disruptive. It has already captured many people’s imaginations but more applications still need to be built and tested. AR can incorporate people’s own devices into their healthcare and make it more personal and convenient.

Prices of the tools used for AR, like motion trackers, are dropping rapidly, which should make AR systems much more common. Depth sensors like the Kinect are now widely available and have even begun appearing in cell phones. Graphics processing units (GPUs) have also been advancing rapidly, driven by advances in other fields.

Training and simulation in healthcare can benefit from AR, since it can reduce costs by replacing expensive physical models with virtual ones. Training can also be made more interactive and fun, and, perhaps most importantly, AR allows better performance metrics to be developed and tracked.

For imaging, AR could improve communication among imaging experts, the people performing procedures and patients. There will also be more collaborative applications in which many people can participate, including remote or virtual participants. AR provides more ways to visualize and interact with images in 3D rather than on a 2D monitor.

HCB News: Are there other augmented reality projects underway at the University of Alberta, or elsewhere, that you are also excited about?

IW: One of the other projects in our lab is the Medbike, a cardiovascular rehabilitation project that helps patients exercise at home by riding a stationary bike through a virtual world. Patients can have trouble keeping up with their rehabilitation exercises on their own, or difficulty travelling to a rehab centre while they are weakened or during the winter. This AR system makes rehab at home more enjoyable while collecting blood pressure and other data under the remote supervision of a healthcare worker.
